| Big Data

"Big Data" is generally defined as data sets so large and complex that they are difficult to manage and process using traditional methods. Big data is a core theme in the evolution of the enterprise data warehousing (EDW) and advanced analytics markets. A growing number of EDW vendors support key big data features such as shared-nothing massively parallel processing (MPP), petabyte scaling, and in-database analytics. Most enterprises have built big data initiatives on the tried-and-true approach of EDWs that support MPP. However, the cost, proprietary nature, inflexibility, and scalability issues of some MPP EDWs have spawned the development of an emerging open source, cloud-oriented approach known as Hadoop. Originating in the mid-2000s via technologies from Yahoo, Google, and other Web 2.0 pioneers, Hadoop is now central to the big data strategies of enterprises, service providers, and other organizations.

The Apache Hadoop community has spawned many promising startups and has resulted in new products and features from established vendors of MPP EDW platforms, data management tools, and business analytics. The Hadoop and EDW markets are rapidly converging as EDW vendors add Hadoop technologies to their solution portfolios and Hadoop tool vendors build tighter alliances with EDW providers. Providers of traditional EDW solutions see great advantages in adopting Hadoop for handling complex content, advanced analytics, and massively parallel in-database processing in the cloud.

Big data is the term for a collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional data processing applications. The challenges include capture, curation, storage, search, sharing, transfer, analysis, and visualization. The trend to larger data sets is due to the additional information derivable from analysis of a single large set of related data, as compared to separate smaller sets with the same total amount of data, allowing correlations to be found to "spot business trends, determine quality of research, prevent diseases, link legal citations, combat crime, and determine real-time roadway traffic conditions."

As of 2012, limits on the size of data sets that are feasible to process in a reasonable amount of time were on the order of exabytes (EB) of data. Scientists regularly encounter limitations due to large data sets in many areas, including meteorology, genomics, connectomics, complex physics simulations, and biological and environmental research. The limitations also affect Internet search, finance and business informatics.

Data sets grow in size in part because they are increasingly being gathered by ubiquitous information-sensing mobile devices, aerial sensory technologies (remote sensing), software logs, cameras, microphones, radio-frequency identification readers, and wireless sensor networks. The world's technological per-capita capacity to store information has roughly doubled every 40 months since the 1980s; as of 2012, every day 2.5 exabytes (2.5×10¹⁸ bytes) of data were created. The challenge for large enterprises is determining who should own big data initiatives that straddle the entire organization.
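The growth figures quoted above (storage capacity doubling every 40 months, and 2.5 exabytes created per day in 2012) can be turned into yearly numbers with a little arithmetic. The sketch below is only an illustration: it assumes steady exponential growth and a 365-day year, and the names `growth_factor`, `DOUBLING_MONTHS`, and `DAILY_EXABYTES_2012` are made up for this example.

```python
DOUBLING_MONTHS = 40          # doubling period quoted in the text
DAILY_EXABYTES_2012 = 2.5     # daily data creation quoted for 2012


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Factor by which capacity grows over `months` under steady doubling."""
    return 2 ** (months / doubling_months)


annual_factor = growth_factor(12)      # growth over one year
decade_factor = growth_factor(120)     # growth over ten years
yearly_exabytes = DAILY_EXABYTES_2012 * 365  # assumes a constant daily rate

print(f"Annual growth: {(annual_factor - 1) * 100:.1f}%")
print(f"Growth over a decade: {decade_factor:.0f}x")
print(f"Data created per year at the 2012 rate: {yearly_exabytes:.1f} EB")
```

Under these assumptions, a 40-month doubling period corresponds to roughly 23% growth per year and an eightfold increase per decade.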

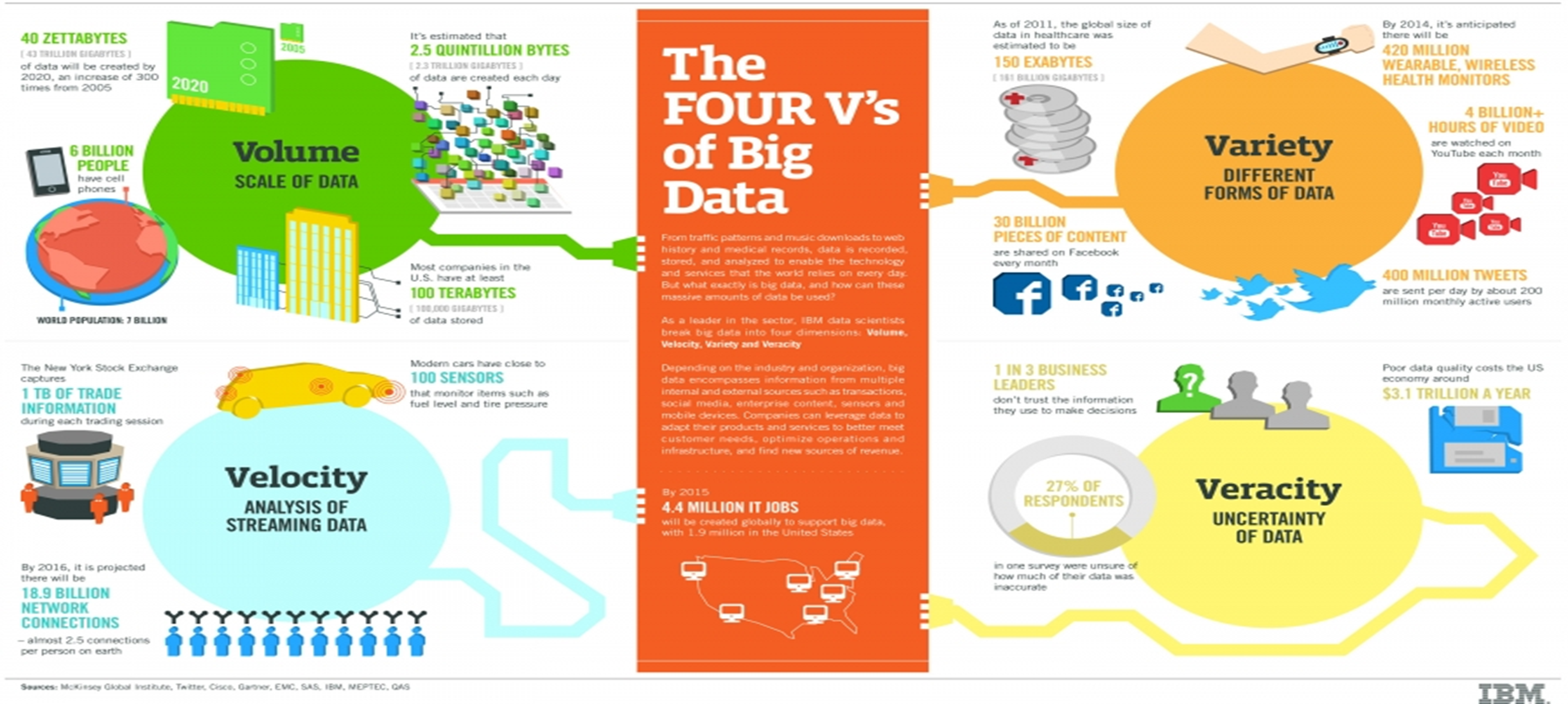

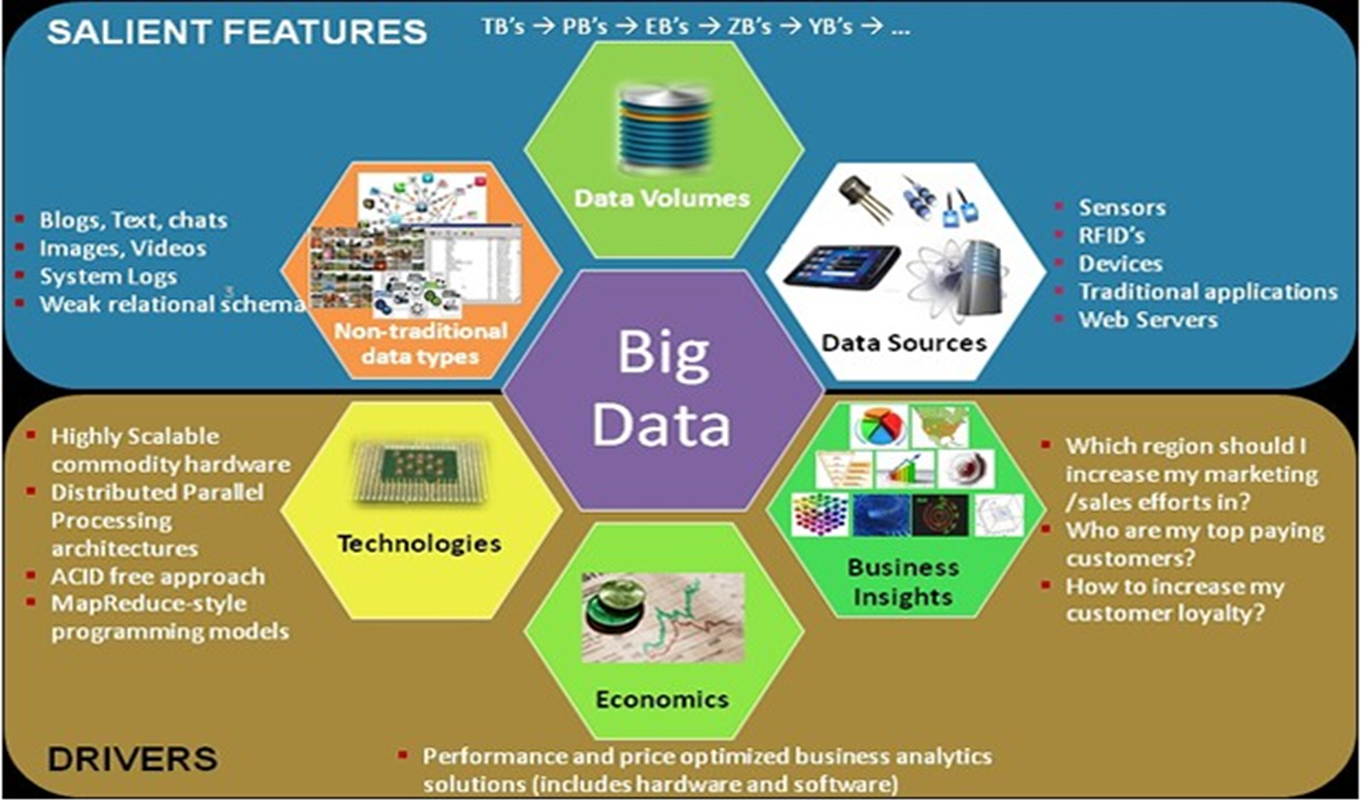

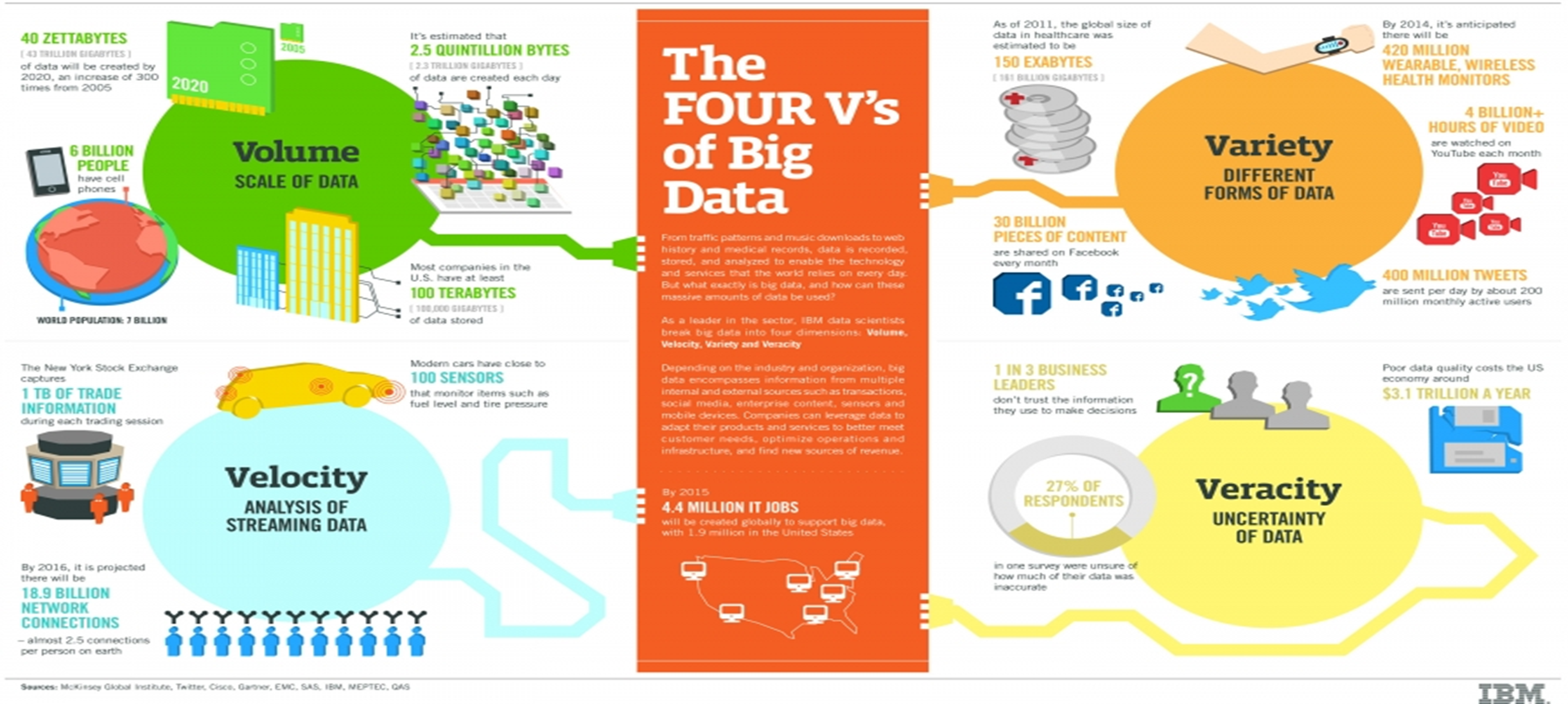

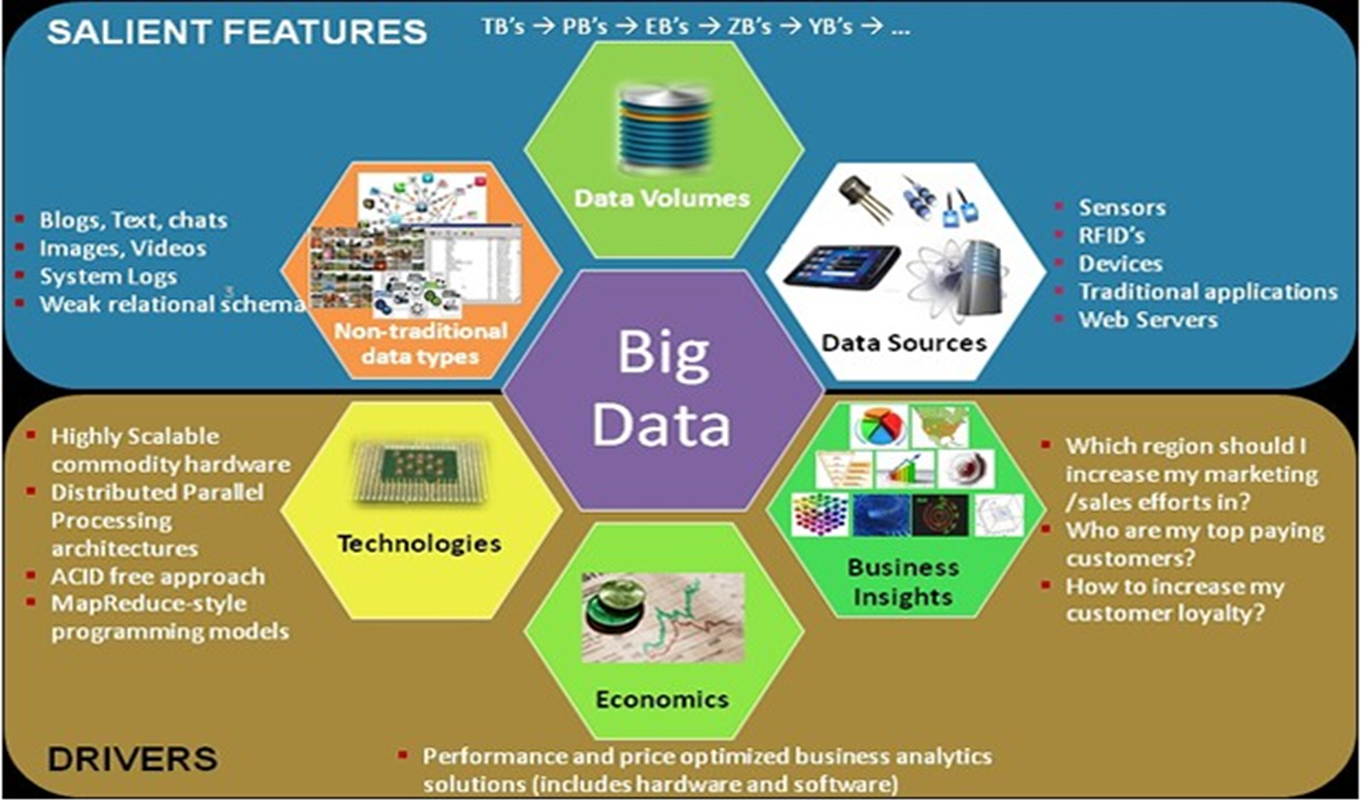

Big data is difficult to work with using most relational database management systems and desktop statistics and visualization packages, requiring instead "massively parallel software running on tens, hundreds, or even thousands of servers". What is considered "big data" varies depending on the capabilities of the organization managing the set, and on the capabilities of the applications that are traditionally used to process and analyze the data set in its domain. "For some organizations, facing hundreds of gigabytes of data for the first time may trigger a need to reconsider data management options. For others, it may take tens or hundreds of terabytes before data size becomes a significant consideration."

Big Data is also often characterized as exhibiting "the three Vs": volume, variety, and velocity. It describes digital information that is collectively large in volume (hundreds of terabytes to petabytes), is in a variety of formats (structured, semi-structured, and unstructured), and has high "velocity" (fast data streams that require real-time or near-real-time processing).
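To make the idea of "massively parallel software" a little more concrete, here is a minimal sketch of the shared-nothing pattern: the data is split into disjoint shards, each worker processes only its own shard, and the partial results are merged at the end. It is a toy under stated assumptions, not how Hadoop or any MPP EDW is implemented: threads stand in for separate servers, and the function names and sample log lines are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(records, n_workers):
    """Split records into n_workers disjoint shards (round-robin)."""
    return [records[i::n_workers] for i in range(n_workers)]


def count_words(shard):
    """Work done by one worker on its own shard only (no shared state)."""
    counts = Counter()
    for line in shard:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(records, n_workers=4):
    """Count words per shard in parallel, then merge the partial results."""
    shards = partition(records, n_workers)
    # Threads stand in for servers here; a real system would ship each
    # shard to a different machine.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(count_words, shards))
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


# Hypothetical sample data for illustration only.
log_lines = [
    "error disk full",
    "warning disk slow",
    "error network down",
    "info disk ok",
]
totals = parallel_word_count(log_lines, n_workers=2)
print(totals.most_common(2))
```

Because no worker touches another worker's shard, adding more workers only requires cutting the data into more shards, which is the property that lets this style of processing scale out across many servers.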

IBM: 4 Vs: volume, variety, velocity and veracity

To these three aspects, we can also add a fourth "V": value. The value of Big Data is potentially great, but it can only be realized with the right combination of people, processes, and technologies. Big Data is no longer just an issue for "big science" research projects. Businesses that exploit Big Data to improve their strategy and execution can pull ahead of their competitors. IBM data scientists break big data into four dimensions: volume, variety, velocity and veracity. This infographic explains and gives examples of each.

Gartner:

Big data is high-volume, high-velocity and high-variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision making.

3 Vs + 1: volume, variety and velocity (+ value)

McKinsey:

According to the McKinsey Global Institute's report "Big data: The next frontier for innovation, competition, and productivity", big data refers to datasets whose size is beyond the ability of typical database software tools to capture, store, manage, and analyze. And the world's data repositories have certainly been growing.

Others believe:

All 5 Vs apply: volume, variety, velocity, veracity and value

16 Top Big Data Analytics Platforms

Data analysis is a do-or-die requirement for today's businesses. We analyze notable vendor choices, from Hadoop upstarts to traditional database players

http://www.emc.com/big-data/index.htm

http://www.emc.com/microsites/big-data-explorer/index.htm#/nav/what

http://gigaom.com/2013/01/16/the-market-for-traditional-servers-is-being-enveloped-by-the-cloud/

https://the-bigdatainstitute.com/

http://www.ibmbigdatahub.com/infographic/four-vs-big-data

http://www.teradatamagazine.com/v11n01/Features/Big-Data/

| Processor or Virtual Storage | Disk Storage |
| --- | --- |
| 1 Bit = Binary Digit | 1 Bit = Binary Digit |
| 8 Bits = 1 Byte | 8 Bits = 1 Byte |
| 1024 Bytes = 1 Kilobyte | 1000 Bytes = 1 Kilobyte |
| 1024 Kilobytes = 1 Megabyte | 1000 Kilobytes = 1 Megabyte |
| 1024 Megabytes = 1 Gigabyte | 1000 Megabytes = 1 Gigabyte |
| 1024 Gigabytes = 1 Terabyte | 1000 Gigabytes = 1 Terabyte |
| 1024 Terabytes = 1 Petabyte | 1000 Terabytes = 1 Petabyte |
| 1024 Petabytes = 1 Exabyte | 1000 Petabytes = 1 Exabyte |
| 1024 Exabytes = 1 Zettabyte | 1000 Exabytes = 1 Zettabyte |
| 1024 Zettabytes = 1 Yottabyte | 1000 Zettabytes = 1 Yottabyte |
| 1024 Yottabytes = 1 Brontobyte | 1000 Yottabytes = 1 Brontobyte |
| 1024 Brontobytes = 1 Geopbyte | 1000 Brontobytes = 1 Geopbyte |
| 1024 Geopbytes = 1 Saganbyte | 1000 Geopbytes = 1 Saganbyte |
| 1024 Saganbytes = 1 Pijabyte | 1000 Saganbytes = 1 Pijabyte |
| 1024 Pijabytes = 1 Alphabyte | 1000 Pijabytes = 1 Alphabyte |
| 1024 Alphabytes = 1 Kryatbyte | 1000 Alphabytes = 1 Kryatbyte |
| 1024 Kryatbytes = 1 Amosbyte | 1000 Kryatbytes = 1 Amosbyte |
| 1024 Amosbytes = 1 Pectrolbyte | 1000 Amosbytes = 1 Pectrolbyte |
| 1024 Pectrolbytes = 1 Bolgerbyte | 1000 Pectrolbytes = 1 Bolgerbyte |
| 1024 Bolgerbytes = 1 Sambobyte | 1000 Bolgerbytes = 1 Sambobyte |

|
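The two columns above use the same unit names with different step sizes (1024 for processor or virtual storage, 1000 for disk storage), so the same label can mean a different number of bytes. The following sketch shows the size of that gap. It assumes the step sizes exactly as listed, stops at yottabyte because the names beyond it are informal, and uses helper names (`to_bytes`, `from_bytes`) that are invented for this example.

```python
# Unit names up to yottabyte, in the order used in the lists above.
UNITS = ["Byte", "Kilobyte", "Megabyte", "Gigabyte", "Terabyte",
         "Petabyte", "Exabyte", "Zettabyte", "Yottabyte"]


def to_bytes(value: float, unit: str, base: int) -> float:
    """Convert `value` of `unit` to bytes, using steps of `base` (1000 or 1024)."""
    if base not in (1000, 1024):
        raise ValueError("base must be 1000 or 1024")
    return value * base ** UNITS.index(unit)


def from_bytes(n_bytes: float, unit: str, base: int) -> float:
    """Express a byte count in `unit`, using steps of `base` (1000 or 1024)."""
    if base not in (1000, 1024):
        raise ValueError("base must be 1000 or 1024")
    return n_bytes / base ** UNITS.index(unit)


# A "1 Terabyte" disk (1000-based) expressed in 1024-based terabytes.
disk_bytes = to_bytes(1, "Terabyte", base=1000)
binary_terabytes = from_bytes(disk_bytes, "Terabyte", base=1024)
print(f"{disk_bytes:.0f} bytes = {binary_terabytes:.3f} binary terabytes")

# The gap between the two conventions widens with each step up the scale.
for unit in UNITS[1:]:
    steps = UNITS.index(unit)
    ratio = 1000 ** steps / 1024 ** steps
    print(f"{unit:<10} decimal/binary ratio: {ratio:.4f}")
```

With these definitions, a disk sold as 1 Terabyte in the 1000-based convention holds about 0.909 Terabytes in the 1024-based convention, and the discrepancy grows at every larger unit.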